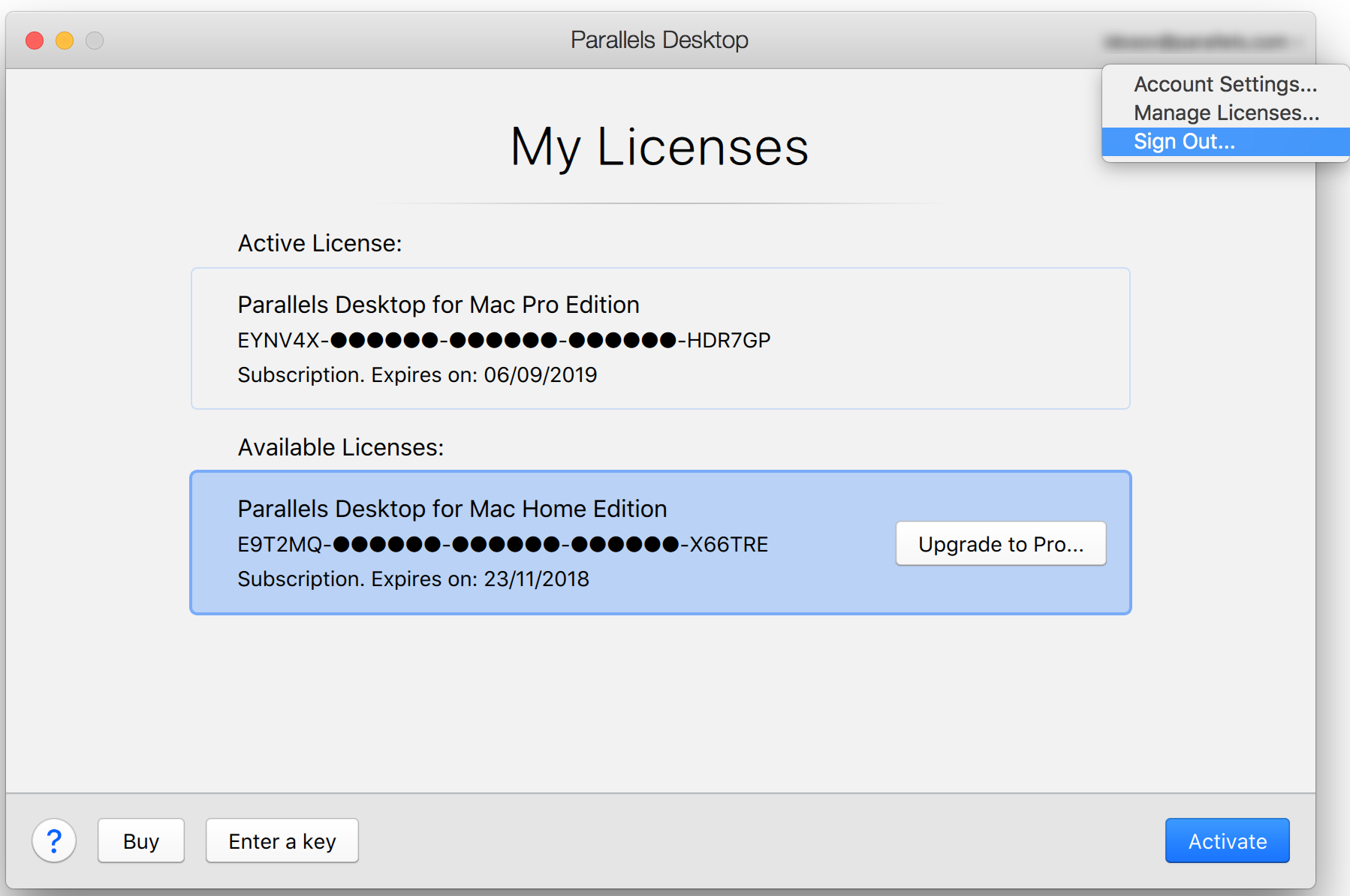


Welcome to H2O 3

H2O is an open source, in-memory, distributed, fast, and scalable machine learning and predictive analytics platform that lets you build machine learning models on big data and put those models into production in an enterprise environment. H2O’s core code is written in Java.

Inside H2O, a distributed key/value store is used to access and reference data, models, and other objects across all nodes and machines. The algorithms are implemented on top of H2O’s distributed Map/Reduce framework and use the Java Fork/Join framework for multi-threading. The data is read in parallel, distributed across the cluster, and stored in memory in a compressed columnar format.

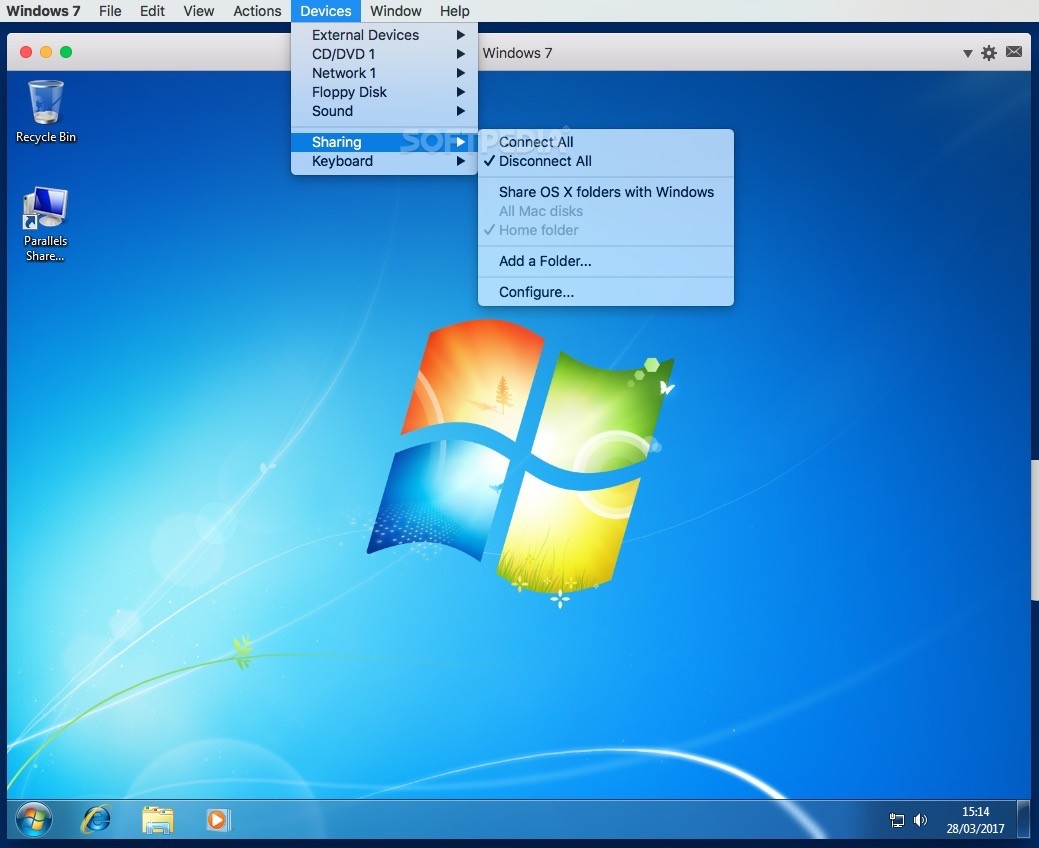


H2O’s data parser has built-in intelligence to guess the schema of the incoming dataset and supports data ingest from multiple sources in various formats. H2O’s REST API allows access to all the capabilities of H2O from an external program or script via JSON over HTTP. The REST API is used by H2O’s web interface (Flow UI), R binding (H2O-R), and Python binding (H2O-Python).

The speed, quality, ease of use, and model deployment for the various cutting-edge supervised and unsupervised algorithms, such as Deep Learning, Tree Ensembles, and GLRM, make H2O a highly sought-after API for big data data science.

Requirements

At a minimum, we recommend the following for compatibility with H2O:

- Operating Systems:
  - Windows 7 or later
  - OS X 10.9 or later
  - Ubuntu 12.04
  - RHEL/CentOS 6 or later
- Languages: Scala, R, and Python are not required to use H2O unless you want to use H2O in those environments, but Java is always required. Supported versions include:
  - Java 7 or later. To build H2O or run H2O tests, the 64-bit JDK is required. To run the H2O binary using the command line, R, or the Python packages, only the 64-bit JRE is required.
  - Scala 2.10 or later
  - R version 3 or later
  - Python 2.7.x, 3.5.x, 3.6.x
- Browser: An internet browser is required to use H2O’s web UI, Flow. Supported versions include the latest version of Chrome, Firefox, Safari, or Internet Explorer.

New Users

If you’re just getting started with H2O, here are some links to help you learn more:

- First things first: download a copy of H2O by selecting a build under “Download H2O” (the “Bleeding Edge” build contains the latest changes, while the latest alpha release is a more stable build), then use the installation instruction tabs to install H2O on your client of choice (standalone, R, Python, Hadoop, or Maven). For first-time users, we recommend downloading the latest alpha release and using the default standalone option (the first tab) as the installation method. Make sure to install Java if it is not already installed.

- Tutorials: Step-by-step examples of our algorithms in action, organized by model type.
- This section describes our new intuitive web interface, Flow. This interface is similar to IPython notebooks and allows you to create a visual workflow to share with others.
- This document describes some of the additional options that you can configure when launching H2O (for example, to specify a different directory for saved Flow data, to allocate more memory, or to use a flatfile for quick configuration of a cluster).
- This section describes the science behind our algorithms and provides a detailed, per-algo view of each model type.
- The GitHub Help system is a useful resource for becoming familiar with Git.

The following session installs the Python dependencies, starts H2O, and imports a sample dataset:

    # Before starting Python, run the following commands to install dependencies.
    # Prepend these commands with `sudo` only if necessary.
    h2o-3 user$ sudo pip install -U requests
    h2o-3 user$ sudo pip install -U tabulate
    h2o-3 user$ sudo pip install -U future
    h2o-3 user$ sudo pip install -U six

    # Start python
    h2o-3 user$ python

    # Run the following command to import the H2O module:
    >>> import h2o

    # Run the following command to initialize H2O on your local machine (single-node cluster).
    >>> h2o.init()

    # If desired, run the GLM, GBM, or Deep Learning demo
    >>> h2o.demo('glm')
    >>> h2o.demo('gbm')
    >>> h2o.demo('deeplearning')

    # Import the Iris (with headers) dataset.
    >>> path = 'smalldata/iris/iris_wheader.csv'
    >>> iris = h2o.import_file(path=path)

    # View a summary of the imported dataset.
    >>> iris.summary()
    sepal_len  sepal_wid  petal_len  petal_wid  class
    ---------  ---------  ---------  ---------  -----------
    5.1        3.5        1.4        0.2        Iris-setosa
    4.9        3          1.4        0.2        Iris-setosa
    4.7        3.2        1.3        0.2        Iris-setosa
    4.6        3.1        1.5        0.2        Iris-setosa
    5          3.6        1.4        0.2        Iris-setosa
    5.4        3.9        1.7        0.4        Iris-setosa
    4.6        3.4        1.4        0.3        Iris-setosa
    5          3.4        1.5        0.2        Iris-setosa
    4.4        2.9        1.4        0.2        Iris-setosa
    4.9        3.1        1.5        0.1        Iris-setosa

    150 rows x 5 columns

Experienced Users

If you’ve used previous versions of H2O, the following links will help guide you through the process of upgrading to H2O-3.

- This section provides a comprehensive guide to assist users in upgrading to H2O 3.0. It gives an overview of the changes to the algorithms and the web UI introduced in this version and describes the benefits of upgrading for users of R, APIs, and Java.
- This document describes the most recent changes in the latest build of H2O. It lists new features, enhancements (including changed parameter default values), and bug fixes for each release, organized by sub-categories such as Python, R, and Web UI.
- If you’re interested in contributing code to H2O, we appreciate your assistance! This document describes how to access our list of Jiras that are suggested tasks for contributors and how to contact us.


Sparkling Water Users

Sparkling Water is a Gradle project with the following submodules:

- Core: Implementation of H2OContext, H2ORDD, and all technical integration code.
- Examples: Applications, demos, and examples.
- ML: Implementation of MLlib pipelines for H2O algorithms.
- Assembly: Creates a “fatJar” composed of all other modules.
- py: Implementation of the (h2o) Python binding to Sparkling Water.

The best way to get started is to modify the core module or create a new module, which extends a project.

Users of our Spark-compatible solution, Sparkling Water, should be aware that Sparkling Water is only supported with the latest version of H2O. Sparkling Water is versioned according to the Spark versioning, so make sure to use the Sparkling Water version that corresponds to the installed version of Spark. For more information about Sparkling Water, refer to the following links.

Getting Started with Sparkling Water

- Download Sparkling Water.
- Sparkling Water Documentation: Read this document first to get started with Sparkling Water.
- Launch on Hadoop and Import from HDFS: Learn how to start Sparkling Water on Hadoop.
- Demos and examples.
- A demo that uses Scala to create a K-means model.
- A demo that uses Scala to create a GBM model.
- Instructions for running Sparkling Water on a YARN cluster.
- A short tutorial that describes project building and demonstrates the capabilities of Sparkling Water using Spark Shell to build a Deep Learning model.
- Sparkling Water FAQ: Answers to many common questions about Sparkling Water.
- An illustrated tutorial that describes how to use RStudio to connect to Sparkling Water.

Python Users

Pythonistas will be glad to know that H2O now provides support for this popular programming language. Python users can also use H2O with IPython notebooks. For more information, refer to the following links.

- Installation instructions: Select the version you want to install (latest stable release or nightly build), then click the Install in Python tab.
- This document represents the definitive guide to using Python with H2O.
- This notebook demonstrates the use of grid search in Python.

R Users

Currently, the only version of R that is known to be incompatible with H2O is R version 3.1.0 (codename “Spring Dance”). If you are using that version, we recommend upgrading R before using H2O.

To check which version of H2O is installed in R, use `versions::installed.versions('h2o')`.

- Documentation: This document contains all commands in the H2O package for R, including examples and arguments. It represents the definitive guide to using H2O in R.
- This illustrated tutorial describes how to use RStudio to connect to Sparkling Water.
- Download this PDF to keep as a quick reference when using H2O in R.

Note: If you are running R on Linux, you must install libcurl, which allows H2O to communicate with R. We also recommend disabling SELinux and any firewalls, at least until you have confirmed that H2O can initialize.

- On Ubuntu, run: `apt-get install libcurl4-openssl-dev`
- On CentOS, run: `yum install libcurl-devel`

API Users

API users will be happy to know that the APIs have been more thoroughly documented in the latest release of H2O and that additional capabilities (such as exporting weights and biases for Deep Learning models) have been added.

REST APIs are generated directly from the code, allowing users to implement machine learning in many ways. For example, REST APIs could be used to call a model created from sensor data and to set up auto-alerts if the sensor data falls below a specified threshold.

- This document describes how the REST API commands are used in H2O, versioning, experimental APIs, verbs, status codes, formats, schemas, payloads, metadata, and examples.
- This document represents the definitive guide to the H2O REST API.
- This document represents the definitive guide to the H2O REST API schemas.

Developers

If you’re looking to use H2O to help you develop your own apps, the following links will provide helpful references.


For the latest version of IntelliJ IDEA, run `./gradlew idea`, then click File > Open within IDEA. Select the `.ipr` file in the repository and click the Choose button.

For older versions of IntelliJ IDEA, run `./gradlew idea`, then choose Import Project within IDEA. Note: This process will take longer, so we recommend using the first method if possible.

For JUnit tests to pass, you may need multiple H2O nodes. Create a “Run/Debug” configuration with the following parameters:

    Type: Application
    Main class: H2OApp
    Use class path of module: h2o-app

After you start multiple “worker” node processes in addition to the JUnit test process, they will cloud up and run the multi-node JUnit tests.

- Detailed instructions on how to build and launch H2O, including how to clone the repository, how to pull from the repository, and how to install required dependencies.
- Maven: Select the version of H2O you want to install (latest stable release or nightly build), then click the Use from Maven tab.
- This page provides information on how to build a version of H2O that generates the correct IDE files.
- Apps.h2o.ai is designed to support application developers via events, networking opportunities, and a new, dedicated website comprising developer kits and technical specs, news, and product spotlights.
- This page provides template info for projects created in Java, Scala, or Sparkling Water.
- H2O Scala API Developer Documentation: The definitive Scala API guide for H2O.
- This blog post by Cliff walks you through building a new algorithm, using K-Means, Quantiles, and Grep as examples.
- Learn more about performance characteristics when implementing new algorithms.
- If you’re interested in contributing code to H2O, we appreciate your assistance! This document describes how to access our list of Jiras that contributors can work on and how to contact us. Note: Accessing this link requires an account.

Supported Versions

- CDH 5.4
- CDH 5.5
- CDH 5.6
- CDH 5.7
- CDH 5.8
- CDH 5.9
- CDH 5.10
- CDH 5.13
- CDH 5.14
- HDP 2.2
- HDP 2.3
- HDP 2.4
- HDP 2.5
- HDP 2.6
- MapR 4.0
- MapR 5.0
- MapR 5.1
- MapR 5.2
- IOP 4.2

Important Points to Remember:

- The command used to launch H2O differs from previous versions.
- Launching H2O on Hadoop requires at least 6 GB of memory.
- Each H2O node runs as a mapper. Run only one mapper per host.
- There are no combiners or reducers.
- Each H2O cluster must have a unique job name.
- `-mapperXmx`, `-nodes`, and `-output` are required.
- Root permissions are not required. Just unzip the H2O .zip file on any single node.
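The required options listed above can be checked before a launch is attempted. The following is a minimal sketch, not part of H2O: `build_h2o_launch_command` is a hypothetical helper that assembles a `hadoop jar` argument list and refuses to build one if `-nodes`, `-mapperXmx`, or `-output` is missing. It only builds the command; it does not run it.

```python
def build_h2o_launch_command(nodes, mapper_xmx, output_dir,
                             jar="h2odriver.jar", extra_args=()):
    """Assemble a `hadoop jar` argument list with the three required options.

    Hypothetical helper for illustration; it is not part of H2O.
    """
    if nodes < 1:
        raise ValueError("nodes must be at least 1")
    if not mapper_xmx:
        raise ValueError("mapper_xmx is required, for example '6g'")
    if not output_dir:
        raise ValueError("output_dir is required")
    return ["hadoop", "jar", jar,
            "-nodes", str(nodes),
            "-mapperXmx", mapper_xmx,
            "-output", output_dir,
            *extra_args]


cmd = build_h2o_launch_command(1, "6g", "hdfsOutputDirName")
print(" ".join(cmd))
```

Returning a list rather than a single string means the result can be handed to `subprocess.run` without shell quoting concerns.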

Prerequisite: Open Communication Paths

H2O communicates using two communication paths. Verify these are open and available for use by H2O.

Path 1: mapper to driver

Optionally specify this port using the `-driverport` option in the `hadoop jar` command (see “Hadoop Launch Parameters” below). This port is opened on the driver host (the host where you entered the `hadoop jar` command). By default, this port is chosen randomly by the operating system. If you don’t want to specify an exact port but still want to restrict the port to a certain range, use the `-driverportrange` option.

Path 2: mapper to mapper

Optionally specify this port using the `-baseport` option in the `hadoop jar` command (see “Hadoop Launch Parameters” below). This port and the next subsequent port are opened on the mapper hosts (the Hadoop worker nodes) where the H2O mapper nodes are placed by the Resource Manager. By default, ports 54321 and 54322 are used.

The mapper port is adaptive: if 54321 and 54322 are not available, H2O will try 54323 and 54324, and so on. The mapper port is designed to be adaptive because, if the YARN cluster is low on resources, YARN will sometimes place two H2O mappers for the same H2O cluster request on the same physical host. For this reason, we recommend opening a range of more than two ports (20 ports should be sufficient).

    hadoop jar h2odriver.jar -nodes 1 -mapperXmx 6g -output hdfsOutputDirName

The above command launches a 6g node of H2O. We recommend you launch the cluster with at least four times the memory of your data file size.

- `mapperXmx` is the mapper size, or the amount of memory allocated to each node. Specify at least 6 GB.
- `nodes` is the number of nodes requested to form the cluster.
- `output` is the name of the directory created each time an H2O cloud is created, so the name must be unique each time it is launched.
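The sizing guidance above (at least four times the data size in total, at least 6 GB per node) can be turned into a quick estimate. This is a rough sketch under those two rules only; `plan_cluster` and its parameter names are hypothetical, and the result still has to respect the one-mapper-per-host rule on your cluster.

```python
import math


def plan_cluster(data_size_gb, mapper_xmx_gb=6, memory_factor=4):
    """Return (nodes, total_memory_gb) for a dataset of the given size.

    Hypothetical helper: applies the "four times the data size" guidance
    and the 6 GB per-node minimum stated in the text.
    """
    if mapper_xmx_gb < 6:
        raise ValueError("the text recommends at least 6 GB per node")
    required_gb = data_size_gb * memory_factor
    nodes = max(1, math.ceil(required_gb / mapper_xmx_gb))
    return nodes, nodes * mapper_xmx_gb


# A 10 GB file needs about 40 GB, which takes seven 6 GB nodes (42 GB).
print(plan_cluster(10))
```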

To monitor your job, direct your web browser to your standard job tracker Web UI. To access H2O’s Web UI, direct your web browser to one of the launched instances. If you are unsure where your JVM is launched, review the output from your command after the nodes have clouded up and formed a cluster. Any of the nodes’ IP addresses will work, as there is no master node.
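The adaptive mapper-port behavior described under Path 2 (try 54321 and 54322, then 54323 and 54324, and so on) can be modeled in a few lines. This sketch only illustrates the search order stated in the text; it is not H2O's implementation, and `pick_port_pair` and the `is_free` callback are hypothetical names.

```python
def pick_port_pair(is_free, base_port=54321, max_pairs=10):
    """Return the first (port, port + 1) pair for which both ports are free.

    `is_free` is a caller-supplied function taking a port number.
    With max_pairs=10 the search covers the 20-port range suggested above.
    """
    for i in range(max_pairs):
        port = base_port + 2 * i
        if is_free(port) and is_free(port + 1):
            return port, port + 1
    raise RuntimeError("no free port pair in the searched range")


# Simulate a host where another H2O mapper already holds 54321 and 54322.
busy = {54321, 54322}
print(pick_port_pair(lambda p: p not in busy))
```

On a real host, `is_free` could attempt to bind a socket to the port; the simulation above keeps the example independent of the local network state.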

Hadoop Launch Parameters

- `-h` | `-help`: Display help.
- `-jobname`: Specify a job name for the Jobtracker to use; the default is H2O_nnnnn (where n is chosen randomly).
- `-principal`, `-keytab` | `-runasuser`: Optionally specify a Kerberos principal and keytab, or specify the runasuser parameter to start clusters on behalf of the user/principal. Note that using runasuser implies that the Hadoop cluster does not have Kerberos.
- `-driverif`: Specify the IP address for callback messages from the mapper to the driver.
- `-driverport`: Specify the port number for callback messages from the mapper to the driver.
- `-driverportrange`: Specify the allowed port range of the driver callback interface.
- `-network`: Specify the IPv4 network(s) to bind to the H2O nodes; multiple networks can be specified to force H2O to use the specified host in the Hadoop cluster. For example, 10.1.2.0/24 allows 256 possibilities.
- `-timeout`: Specify the timeout duration (in seconds) to wait for the cluster to form before failing. Note: The default value is 120 seconds; if your cluster is very busy, this may not provide enough time for the nodes to launch. If H2O does not launch, try increasing this value (for example, `-timeout 600`).
- `-disown`: Exit the driver after the cluster forms. Note: For Qubole users who include the `-disown` flag, if your cluster is dying right after launch, add `-Dmapred.jobclient.killjob.onexit=false` as a launch parameter.
- `-notify`: Specify a file to write when the cluster is up. The file contains the IP and port of the embedded web server for one of the nodes in the cluster. All mappers must start before the H2O cloud is considered “up”.
- `-mapperXmx`: Specify the amount of memory to allocate to H2O (at least 6g).
- `-extramempercent`: Specify the extra memory for internal JVM use outside of the Java heap. This is a percentage of mapperXmx.
- `-n` | `-nodes`: Specify the number of nodes.
- `-nthreads`: Specify the number of CPUs to use. This defaults to using all CPUs on the host, or you can enter a positive integer.
- `-baseport`: Specify the initialization port for the H2O nodes. The default is 54321.
- `-license`: Specify the local filesystem directory and the license file name.
- `-o` | `-output`: Specify the HDFS directory for the output.
- `-flowdir`: Specify the directory for saved flows. By default, H2O will try to find the HDFS home directory to use as the directory for flows. If the HDFS home directory is not found, flows cannot be saved unless a directory is specified using `-flowdir`.
- `-port_offset`: This parameter allows you to specify the relationship of the API port (“web port”) and the internal communication port. The h2o port and API port are derived from each other, and we cannot fully decouple them. Instead, we allow you to specify an offset such that h2o port = api port + offset. This allows you to move the communication port to a specific range that can be firewalled.
- `-proxy`: Enables Proxy mode.
- `-report_hostname`: This flag allows the user to specify the machine hostname instead of the IP address when launching H2O Flow. This option can only be used when H2O on Hadoop is started in Proxy mode (with `-proxy`).

JVM arguments

- `-ea`: Enable assertions to verify boolean expressions for error detection.
- `-verbose:gc`: Include heap and garbage collection information in the logs. Deprecated in Java 9, removed in Java 10.
- `-XX:+PrintGCDetails`: Include a short message after each garbage collection. Deprecated in Java 9, removed in Java 10.
- `-Xlog:gc=info`: Prints garbage collection information into the logs. Introduced in Java 9. Usage enforced since Java 10. A replacement for the `-verbose:gc` and `-XX:+PrintGCDetails` flags, which are deprecated in Java 9 and removed in Java 10.

Accessing S3 Data from Hadoop

H2O launched on Hadoop can access S3 data in addition to HDFS. To enable access, follow the instructions below.

Edit Hadoop’s core-site.xml, then set the `HADOOP_CONF_DIR` environment property to the directory containing the core-site.xml file. Typically, the configuration directory for most Hadoop distributions is /etc/hadoop/conf.

You can also pass the S3 credentials when launching H2O with the `hadoop jar` command. Use the `-D` flag to pass the credentials.

Using H2O with YARN

When you launch H2O on Hadoop using the `hadoop jar` command, YARN allocates the necessary resources to launch the requested number of nodes. H2O launches as a MapReduce (V2) task, where each mapper is an H2O node of the specified size.

    hadoop jar h2odriver.jar -nodes 1 -mapperXmx 6g -output hdfsOutputDirName

Occasionally, YARN may reject a job request.

This usually occurs either because there is not enough memory to launch the job or because of an incorrect configuration.

If YARN rejects the job request, try launching the job with less memory to see if that is the cause of the failure. Specify smaller values for `-mapperXmx` (we recommend a minimum of 2g) and `-nodes` (start with 1) to confirm that H2O can launch successfully.

To resolve configuration issues, adjust the maximum memory that YARN will allow when launching each mapper. If the cluster manager settings are configured for the default maximum memory size but the memory required for the request exceeds that amount, YARN will not launch the job and H2O will time out. If you are using the default configuration, change the configuration settings in your cluster manager to specify memory allocation when launching mapper tasks. To calculate the amount of memory required for a successful launch, use the following formula.
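A rough sketch of this calculation, assuming each container must hold the Java heap (`-mapperXmx`) plus the extra JVM memory that `-extramempercent` defines as a percentage of `mapperXmx` under “Hadoop Launch Parameters”. The function names, the `yarn_max_mb` argument, and the 10 percent default are assumptions made for illustration, not values confirmed by this page.

```python
import math


def yarn_container_mb(mapper_xmx_gb, extra_mem_percent=10):
    """Estimate the memory (in MB) one H2O mapper asks YARN for.

    Assumption: container = heap + heap * extra_mem_percent / 100.
    The 10 percent default is illustrative only.
    """
    heap_mb = mapper_xmx_gb * 1024
    return math.ceil(heap_mb * (100 + extra_mem_percent) / 100)


def fits_in_yarn(mapper_xmx_gb, yarn_max_mb, extra_mem_percent=10):
    """True if the estimated container fits under YARN's per-container maximum."""
    return yarn_container_mb(mapper_xmx_gb, extra_mem_percent) <= yarn_max_mb


print(yarn_container_mb(6))        # heap of 6144 MB plus 10 percent overhead
print(fits_in_yarn(6, 8192))       # fits under an 8 GB container limit
print(fits_in_yarn(6, 6144))       # does not fit: the overhead pushes it over
```

The last call shows the failure mode described above: a limit equal to the heap size alone is too small once the extra memory is counted.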

How H2O runs on YARN

Let’s say that you have a Hadoop cluster with six worker nodes and six HDFS nodes. For architectural diagramming purposes, the worker nodes and HDFS nodes are shown as separate blocks in the block diagram, but they may actually be running on the same physical machines. The `hadoop jar` command that you run on the edge node talks to the YARN Resource Manager to launch an H2O MRv2 (MapReduce v2) job.

The Resource Manager places the requested number of H2O nodes (aka MRv2 mappers, aka YARN containers) – three in this example – on worker nodes. See the picture below. Once the H2O job’s nodes all start, they find each other and create an H2O cluster (as shown by the dark blue line encircling the three H2O nodes). The three H2O nodes work together to perform distributed Machine Learning functions as a group, as shown below. Note how the three worker nodes that are not part of the H2O job have been removed from the picture below for explanatory purposes. They aren’t part of the compute and memory resources used by the H2O job. The full complement of HDFS is still available, however.

Prerequisites

- Linux kernel version 3.8+ or Mac OS X 10.6+
- VirtualBox
- Latest version of Docker is installed and configured
- Docker daemon is running (enter all commands below in the Docker daemon window)
- Using User directory (not root)

Notes:

- Older Linux kernel versions are known to cause kernel panics that break Docker. There are ways around it, but these should be attempted at your own risk. To check the version of your kernel, run `uname -r` at the command prompt. The walkthrough that follows has been tested on Mac OS X 10.10.1.
- The Dockerfile always pulls the latest H2O release.
- The Docker image only needs to be built once.
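The kernel prerequisite can also be checked from a script. The sketch below compares a `uname -r` style release string against the 3.8 minimum stated above; `kernel_at_least` is a hypothetical helper, and on Linux `platform.release()` returns the same string that `uname -r` prints.

```python
import platform
import re


def kernel_at_least(release, minimum=(3, 8)):
    """True if a `uname -r` style release string is at or above `minimum`."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError("unrecognized kernel release: %r" % release)
    return (int(match.group(1)), int(match.group(2))) >= minimum


# Fixed example strings, so the result does not depend on the local machine.
print(kernel_at_least("3.13.0-24-generic"))
print(kernel_at_least("3.2.0-4-amd64"))

# On a Linux host, check the running kernel itself.
if platform.system() == "Linux":
    print(platform.release(), kernel_at_least(platform.release()))
```

Comparing the parsed numbers as a tuple avoids the string-comparison trap where "3.13" would sort before "3.8".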

Walkthrough

Step 1 - Install and Launch Docker

Select the appropriate installation method for your OS.

Note: By default, Docker allocates 2GB of memory for Mac installations. Be sure to increase this value. We normally suggest 3-4 times the size of the dataset for the amount of memory required.

Step 2 - Create or Download Dockerfile

Note: If the following commands do not work, prepend them with `sudo`.

Create a folder on the host OS to hold your Dockerfile. Next, either download or create a Dockerfile, which is a build recipe that builds the container.

When Docker starts, its terminal output reports the IP address of the default machine, for example:

    docker is configured to use the default machine with IP 192.168.99.100

After obtaining the IP address, point your browser to that IP address and port to open Flow. In R and Python, you can access the instance by installing the latest version of the H2O R or Python package and then initializing H2O.